Last edited by 风云龙一 on 2025-4-22 15:32

Overview

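Do you know who sits at your desk while you're away? When did they sit there, and what were they looking at? Out of curiosity, I used the 小安派 BW21-CBV-Kit to build a desk-monitoring gadget that quietly photographs whoever sits down...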

Parts

- BW21-CBV-Kit x1

- SD card x1

- Tactile push button x2

- Green LED (optional) x1

- Red LED (optional) x1

- Ultrasonic distance sensor x1

Implementation

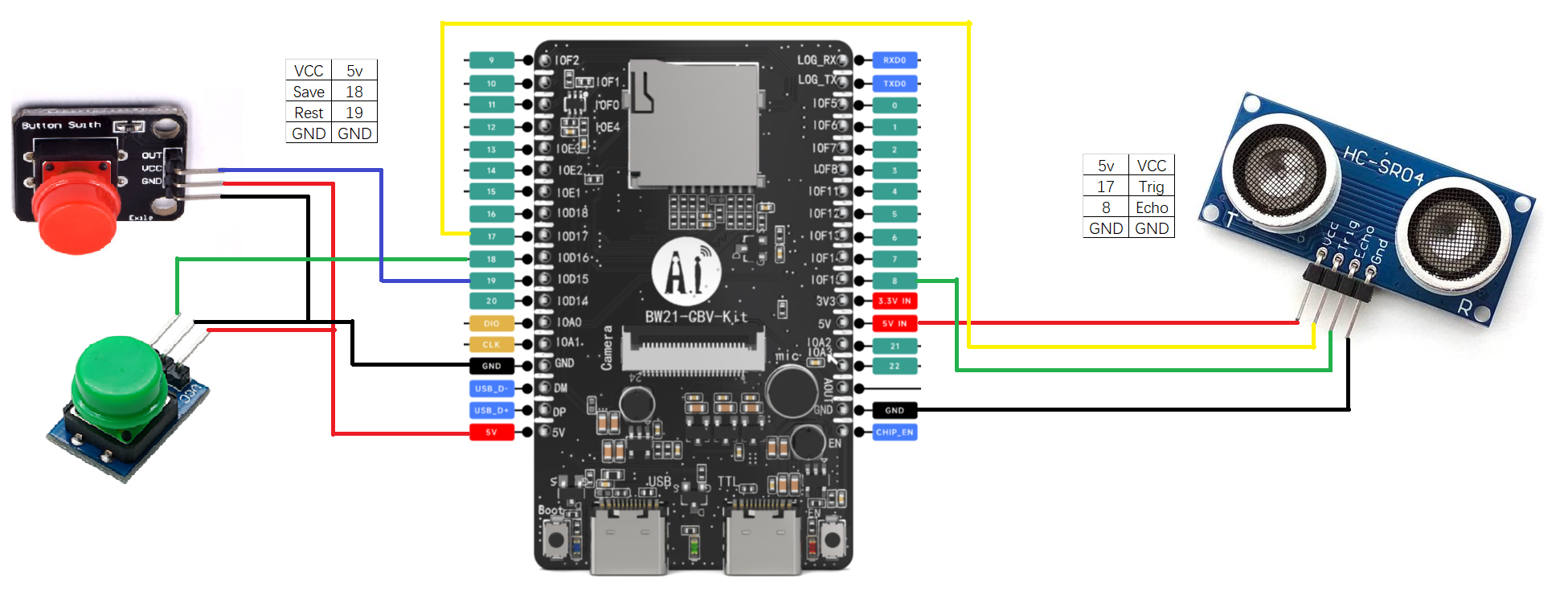

1. Hardware wiring

2. Software

The firmware is an Arduino sketch adapted from the RTSPFaceRecognition example.

Code walkthrough

```cpp
#include "WiFi.h"
#include "StreamIO.h"
#include "VideoStream.h"
#include "RTSP.h"
#include "NNFaceDetectionRecognition.h"
#include "VideoStreamOverlay.h"
#include <NTPClient.h>
#include <WiFiUdp.h>
#include "AmebaFatFS.h"
#include <NewPing.h>
#include <time.h>

// Ultrasonic distance sensor
#define TRIGGER_PIN 17
#define ECHO_PIN 8
#define MAX_DISTANCE 400  // longest distance to measure, in cm

NewPing sonar(TRIGGER_PIN, ECHO_PIN, MAX_DISTANCE);

// Buttons: IO18 = green (register your face), IO19 = red (clear registered faces)
#define IOD18_PIN 18
#define IOD19_PIN 19

#define CHANNEL 0    // full-HD JPEG channel for RTSP and snapshots
#define CHANNELNN 3  // RGB channel fed to the neural network

// Lower resolution for NN processing
#define NNWIDTH 576
#define NNHEIGHT 320

#define NEAR_CM 50.0   // someone within this distance counts as seated
#define DWELL_MS 3000  // how long they must stay before a photo is taken

volatile float dis = 0.0;     // latest distance in cm, updated in loop()
String name = "";
uint32_t img_addr = 0;
uint32_t img_len = 0;
unsigned long startTime = 0;  // when someone first came within NEAR_CM
bool GPIOisHigh = false;      // true while the dwell timer is running
bool photoTaken = false;      // true once the current visit has been photographed
bool facedetisTRUE = false;   // true while at least one face is detected

AmebaFatFS fs;

// JPEG output with snapshot enabled, so Camera.getImage() can grab still frames
VideoSetting config(VIDEO_FHD, CAM_FPS, VIDEO_JPEG, 1);
// VideoSetting config(VIDEO_FHD, 30, VIDEO_H264, 0);
VideoSetting configNN(NNWIDTH, NNHEIGHT, 10, VIDEO_RGB, 0);
NNFaceDetectionRecognition facerecog;
RTSP rtsp;
StreamIO videoStreamer(1, 1);
StreamIO videoStreamerFDFR(1, 1);
StreamIO videoStreamerRGBFD(1, 1);

char ssid[] = "your_wifi_ssid";      // your network SSID (name)
char pass[] = "your_wifi_password";  // your network password
int status = WL_IDLE_STATUS;

WiFiUDP ntpUDP;
// NTP server, UTC+8 offset (28800 s), resync every 60 s
NTPClient timeClient(ntpUDP, "pool.ntp.org", 28800, 60000);

IPAddress ip;
int rtsp_portnum;

void FRPostProcess(std::vector<FaceRecognitionResult> results);

// Pad numbers below 10 with a leading zero
String padWithZero(int num) {
    return (num < 10) ? "0" + String(num) : String(num);
}

// Build a file name of the form YYYY-MM-DD_hh-mm-ss.jpg
String generateFileName() {
    timeClient.update();
    // getEpochTime() already includes the UTC+8 offset
    unsigned long epochTime = timeClient.getEpochTime();
    Serial.println(epochTime);
    time_t localTime = epochTime;
    struct tm *ptm = localtime(&localTime);

    int year = ptm->tm_year + 1900;
    int month = ptm->tm_mon + 1;
    int day = ptm->tm_mday;
    int hour = ptm->tm_hour;
    int minute = ptm->tm_min;
    int second = ptm->tm_sec;

    String fileName = String(year) + "-" + padWithZero(month) + "-" + padWithZero(day) + "_" +
                      padWithZero(hour) + "-" + padWithZero(minute) + "-" + padWithZero(second) + ".jpg";
    Serial.print("Current Date and Time: ");
    Serial.println(fileName);
    return fileName;
}

// Save the current JPEG frame to the SD card under a timestamped name
bool saveimage() {
    fs.begin();
    String fileName = generateFileName();
    File file = fs.open(String(fs.getRootPath()) + fileName);
    delay(1000);  // brief pause before grabbing the frame
    Camera.getImage(CHANNEL, &img_addr, &img_len);
    file.write((uint8_t *)img_addr, img_len);
    file.close();
    fs.end();
    return true;
}

void setup()
{
    Serial.begin(115200);
    pinMode(IOD18_PIN, INPUT);
    pinMode(IOD19_PIN, INPUT);

    // Attempt to connect to the Wi-Fi network
    while (status != WL_CONNECTED) {
        Serial.print("Attempting to connect to WPA SSID: ");
        Serial.println(ssid);
        status = WiFi.begin(ssid, pass);
        delay(2000);  // wait 2 seconds for the connection
    }
    ip = WiFi.localIP();

    timeClient.begin();  // start the NTP client

    // Configure camera video channels; adjust the bitrate to your Wi-Fi quality
    config.setBitrate(2 * 1024 * 1024);  // 2 Mbps is recommended for RTSP to avoid congestion
    Camera.configVideoChannel(CHANNEL, config);
    Camera.configVideoChannel(CHANNELNN, configNN);
    Camera.videoInit();

    // Configure RTSP with the matching video format information
    rtsp.configVideo(config);
    rtsp.begin();
    rtsp_portnum = rtsp.getPort();

    // Configure the face recognition model: select the NN task and models
    facerecog.configVideo(configNN);
    facerecog.modelSelect(FACE_RECOGNITION, NA_MODEL, DEFAULT_SCRFD, DEFAULT_MOBILEFACENET);
    facerecog.begin();
    facerecog.setResultCallback(FRPostProcess);

    // Stream the main video channel to RTSP
    videoStreamer.registerInput(Camera.getStream(CHANNEL));
    videoStreamer.registerOutput(rtsp);
    if (videoStreamer.begin() != 0) {
        Serial.println("StreamIO link start failed");
    }
    Camera.channelBegin(CHANNEL);

    // Stream the RGB channel to face detection/recognition
    videoStreamerRGBFD.registerInput(Camera.getStream(CHANNELNN));
    videoStreamerRGBFD.setStackSize();
    videoStreamerRGBFD.setTaskPriority();
    videoStreamerRGBFD.registerOutput(facerecog);
    if (videoStreamerRGBFD.begin() != 0) {
        Serial.println("StreamIO link start failed");
    }
    Camera.channelBegin(CHANNELNN);

    // Start OSD drawing on the RTSP video channel
    OSD.configVideo(CHANNEL, config);
    OSD.begin();
}

void loop()
{
    // ping() returns 0 when no echo arrives within MAX_DISTANCE; treat that as "nobody there"
    unsigned int uS = sonar.ping();
    dis = (uS == 0) ? MAX_DISTANCE : (float)uS / US_ROUNDTRIP_CM;
    Serial.print("Ping: ");
    Serial.print(dis);
    Serial.println(" cm");

    if (digitalRead(IOD18_PIN) == HIGH) {
        // Green button: register the face in view as "myself"
        name = "myself";
        facerecog.registerFace(name);
    } else if (digitalRead(IOD19_PIN) == HIGH) {
        // Red button: clear all registered faces
        facerecog.resetRegisteredFace();
    }

    delay(2000);
    OSD.createBitmap(CHANNEL);  // clear the overlay
    OSD.update(CHANNEL);
}

// Called with each face recognition result
void FRPostProcess(std::vector<FaceRecognitionResult> results)
{
    uint16_t im_h = config.height();
    uint16_t im_w = config.width();

    Serial.print("Network URL for RTSP Streaming: ");
    Serial.print("rtsp://");
    Serial.print(ip);
    Serial.print(":");
    Serial.println(rtsp_portnum);
    Serial.println(" ");

    printf("Total number of faces detected = %d\r\n", facerecog.getResultCount());
    OSD.createBitmap(CHANNEL);

    // Seat empty: stop the timer and allow the next visit to be photographed
    if (dis > NEAR_CM) {
        GPIOisHigh = false;
        photoTaken = false;
    }

    if (facerecog.getResultCount() > 0) {
        facedetisTRUE = true;
        for (int i = 0; i < facerecog.getResultCount(); i++) {
            FaceRecognitionResult item = results[i];
            // Coordinates are floats from 0.00 to 1.00; scale to RTSP pixels
            int xmin = (int)(item.xMin() * im_w);
            int xmax = (int)(item.xMax() * im_w);
            int ymin = (int)(item.yMin() * im_h);
            int ymax = (int)(item.yMax() * im_h);

            // Red box for unknown faces, green for registered ones
            uint32_t osd_color = (String(item.name()) == "unknown") ? OSD_COLOR_RED : OSD_COLOR_GREEN;

            // Draw the bounding box and the name above it
            printf("Face %d name %s:\t%d %d %d %d\n\r", i, item.name(), xmin, xmax, ymin, ymax);
            OSD.drawRect(CHANNEL, xmin, ymin, xmax, ymax, 3, osd_color);
            char text_str[40];
            snprintf(text_str, sizeof(text_str), "Face:%s", item.name());
            OSD.drawText(CHANNEL, xmin, ymin - OSD.getTextHeight(CHANNEL), text_str, osd_color);

            if (dis <= NEAR_CM && !photoTaken) {
                if (!GPIOisHigh) {
                    // Someone just came within NEAR_CM: start the dwell timer
                    startTime = millis();
                    GPIOisHigh = true;
                } else if (millis() - startTime >= DWELL_MS && String(item.name()) != "myself") {
                    // Seated for DWELL_MS and not you: save one photo for this visit.
                    // photoTaken stays set until the seat is empty again.
                    saveimage();
                    Serial.println("----------photo save complete!------------");
                    photoTaken = true;
                }
            }
        }
    } else {
        facedetisTRUE = false;
    }
    OSD.update(CHANNEL);
}
```

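1. Face detection and recognition

Face detection starts as soon as the board is powered. First point the camera at yourself, stay within 50 cm of the distance sensor, and press the green button: the system registers your face under the name "myself", so it will not photograph you when you sit at your own desk. (If you also want to log when you yourself sit down and leave, remove the `String(item.name()) != "myself"` check from the photo-taking condition.)

A stranger who sits down is recognized as "unknown"; once they are close enough and have stayed for 3 seconds, a photo is taken and saved.

Press the red button to delete the registered faces, then press the green button to register again.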
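The capture rule described above (within 50 cm, seated for 3 seconds, not registered as "myself", one photo per visit) can be modelled off-device as a small state machine. This is a simplified sketch under stated assumptions: `CaptureTrigger` and `update()` are hypothetical names, not part of the Ameba SDK, and timestamps are passed in rather than read from `millis()`.

```cpp
#include <cassert>
#include <string>

// Hypothetical model of the capture rule; CaptureTrigger is not part of any SDK.
// Thresholds mirror the article: 50 cm distance, 3000 ms dwell time.
struct CaptureTrigger {
    double nearCm = 50.0;
    unsigned long dwellMs = 3000;
    bool timing = false;        // someone is within nearCm and the timer is running
    bool photoTaken = false;    // this visit has already been photographed
    unsigned long startMs = 0;  // when the current visit started

    // Call once per detected face per frame; returns true when a photo should be saved.
    bool update(unsigned long nowMs, double distanceCm, const std::string& name) {
        if (distanceCm > nearCm) {  // seat is empty: reset for the next visitor
            timing = false;
            photoTaken = false;
            return false;
        }
        if (photoTaken) return false;  // at most one photo per visit
        if (!timing) {                 // someone just sat down: start the timer
            timing = true;
            startMs = nowMs;
            return false;
        }
        if (nowMs - startMs >= dwellMs && name != "myself") {
            photoTaken = true;
            return true;
        }
        return false;
    }
};
```

Latching `photoTaken` until the distance rises above the threshold gives one photo per visit without busy-waiting, which matters because a recognition callback should return quickly rather than block until the person leaves.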

2. Distance monitoring

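An ultrasonic distance sensor tells the system whether someone is actually sitting at the desk. The sketch uses a 50 cm threshold; adjust it to suit your desk layout and the distance you want to cover.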

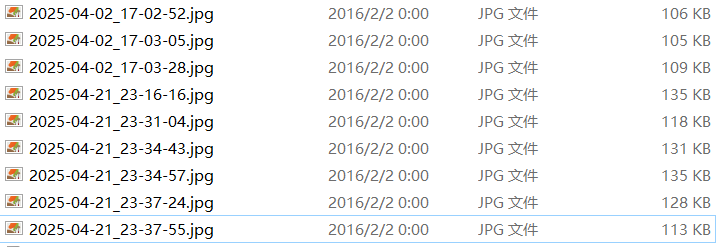
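The sketch turns the sensor's round-trip echo time into centimetres with NewPing (`uS / US_ROUNDTRIP_CM`), and NewPing's `ping()` returns 0 when no echo arrives. A rough sketch of that arithmetic, assuming an HC-SR04-style sensor and sound travelling at about 343 m/s; `echoToCm` and `seatOccupied` are hypothetical helpers:

```cpp
#include <cassert>

// Hypothetical helper: convert a round-trip echo time (microseconds) to centimetres.
// Assumes ~343 m/s, i.e. about 0.0343 cm per microsecond.
// Returns -1 when no echo was received (echo time 0), so "no reading"
// is never mistaken for "someone is very close".
double echoToCm(unsigned long echoUs) {
    if (echoUs == 0) return -1.0;
    return echoUs * 0.0343 / 2.0;  // halve it: the pulse travels out and back
}

// A seat counts as occupied only for a valid reading at or below the threshold.
bool seatOccupied(unsigned long echoUs, double thresholdCm = 50.0) {
    double cm = echoToCm(echoUs);
    return cm >= 0.0 && cm <= thresholdCm;
}
```

Dividing by 2 and multiplying by 0.0343 is equivalent to dividing by roughly 58 µs per cm, which plays the same role as NewPing's `US_ROUNDTRIP_CM` constant.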

3. Naming saved images by time

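After connecting to Wi-Fi, the board fetches the current time from an NTP server.

Each image is named after the capture time, in the format YYYY-MM-DD_hh-mm-ss.jpg.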
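For testing the naming scheme on a desktop, the same YYYY-MM-DD_hh-mm-ss.jpg format can be produced with standard `strftime` instead of manual zero padding. A minimal sketch, assuming the UTC+8 offset (28800 s) that the sketch passes to NTPClient; `timestampFileName` is a hypothetical helper:

```cpp
#include <cassert>
#include <ctime>
#include <string>

// Hypothetical helper: build "YYYY-MM-DD_hh-mm-ss.jpg" from a Unix timestamp.
// The offset is added first and the result formatted with gmtime, so the
// host machine's own time zone does not affect the output.
std::string timestampFileName(long long epochUtc, long utcOffsetSec = 28800) {
    std::time_t t = static_cast<std::time_t>(epochUtc + utcOffsetSec);
    std::tm* tm = std::gmtime(&t);
    char buf[32];
    // strftime zero-pads month, day, hour, minute and second
    std::strftime(buf, sizeof(buf), "%Y-%m-%d_%H-%M-%S.jpg", tm);
    return std::string(buf);
}
```

For example, `timestampFileName(1700000000)` gives `2023-11-15_06-13-20.jpg`. Because the names sort lexicographically in time order, a plain directory listing of the SD card is already chronological.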

Notes

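The original face detection example streams the camera's video as H.264:

`VideoSetting config(VIDEO_FHD, 30, VIDEO_H264, 0);`

In that mode a still image cannot be grabbed directly, so the main channel is configured to output JPEG frames instead, with the last argument set to 1 to enable snapshots:

`VideoSetting config(VIDEO_FHD, CAM_FPS, VIDEO_JPEG, 1);`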

Possible extensions

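This build only saves photos to the SD card. To check them remotely, in real time or once a day, connect to a cloud server over MQTT and upload each image; that way you can see who visits your desk as it happens.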
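A full-HD JPEG is often larger than an MQTT client is configured to send in one message (some Arduino MQTT clients default to a buffer of only a few hundred bytes), so one option is to split each image into numbered chunks and reassemble them on the server. A library-independent sketch, assuming a hypothetical `Chunk` layout and chunk size; the actual publish call depends on whichever MQTT client you choose:

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical chunk format: position, total count, and the bytes themselves.
struct Chunk {
    uint16_t index;                // 0-based position of this chunk
    uint16_t total;                // total number of chunks for the image
    std::vector<uint8_t> payload;  // at most chunkSize bytes
};

// Split an image buffer into chunks of at most chunkSize bytes.
std::vector<Chunk> splitIntoChunks(const uint8_t* data, size_t len, size_t chunkSize) {
    assert(chunkSize > 0);
    std::vector<Chunk> chunks;
    uint16_t total = static_cast<uint16_t>((len + chunkSize - 1) / chunkSize);
    for (size_t off = 0, i = 0; off < len; off += chunkSize, ++i) {
        size_t n = (len - off < chunkSize) ? len - off : chunkSize;
        chunks.push_back({static_cast<uint16_t>(i), total,
                          std::vector<uint8_t>(data + off, data + off + n)});
    }
    return chunks;
}

// Rebuild the image on the receiving side; chunks may arrive in any order.
// Returns an empty vector if any chunk is missing.
std::vector<uint8_t> reassemble(const std::vector<Chunk>& chunks) {
    if (chunks.empty()) return {};
    uint16_t total = chunks[0].total;
    std::vector<const Chunk*> slots(total, nullptr);
    for (const Chunk& c : chunks)
        if (c.index < total) slots[c.index] = &c;
    std::vector<uint8_t> out;
    for (const Chunk* c : slots) {
        if (c == nullptr) return {};
        out.insert(out.end(), c->payload.begin(), c->payload.end());
    }
    return out;
}
```

On the device, each chunk would be published as one message (for example with the image's timestamped file name in the topic); the server collects chunks until all `total` pieces have arrived and writes the reassembled bytes out as a .jpg.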